Low-Flow (7-Day, 10-Year) Classical Statistical and Improved Machine Learning Estimation Methodologies

Abstract

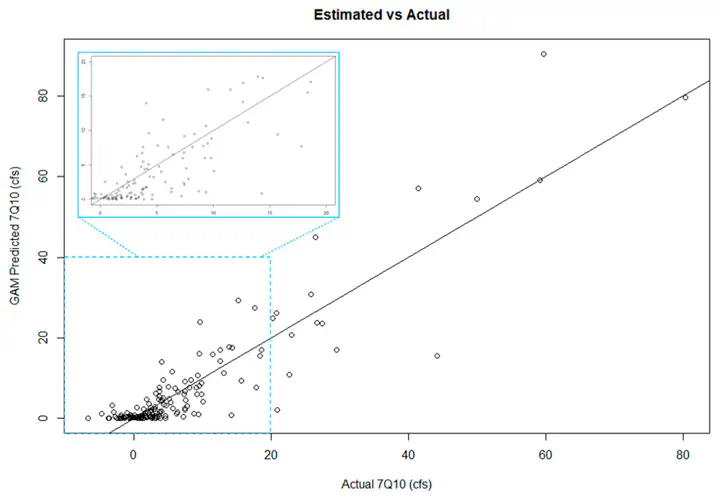

Water resource managers require accurate estimates of the 7-day, 10-year low flow (7Q10) of streams for many reasons, including protecting aquatic species, designing wastewater treatment plants, and calculating municipal water availability. StreamStats, a publicly available web application developed by the United States Geological Survey, is commonly used by resource managers to estimate the 7Q10 in states where it is available; it relies on state-by-state, locally calibrated regression equations. This paper extends the StreamStats methodology and improves 7Q10 estimation by developing a more generalized, regionally applicable approach. In addition to two classical methods, multiple linear regression (MLR) and multiple linear regression in log space (LTLR), three promising machine learning algorithms, random forest (RF) decision trees, neural networks (NN), and generalized additive models (GAM), are tested to determine whether more advanced statistical methods offer improved estimation. For illustrative purposes, the methodology is applied to and verified for the full range of unimpaired, gaged basins in both the northeast and mid-Atlantic hydrologic regions of the United States (with basin sizes ranging from 2–1419 mi²) using leave-one-out cross-validation (LOOCV). The squared Pearson correlation coefficient (R²), root mean square error (RMSE), Kling–Gupta Efficiency (KGE), and Nash–Sutcliffe Efficiency (NSE) are used to evaluate the performance of each method. Results suggest that the performance of each method varies with basin size, with RF yielding the smallest average RMSE (5.85) across all ranges of basin sizes.
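The evaluation procedure summarized above can be illustrated with a minimal sketch. It is not the implementation used in this study: the data are synthetic, the two predictors (basin area and precipitation) and all function names are assumptions chosen for illustration, and KGE is written in its commonly used 2009 form, which may differ from the variant applied in the paper. Only MLR and LTLR are shown, fitted by ordinary least squares.

```python
import numpy as np

def rmse(obs, sim):
    # Root mean square error, in the units of the observations.
    return float(np.sqrt(np.mean((sim - obs) ** 2)))

def nse(obs, sim):
    # Nash-Sutcliffe Efficiency: 1 is perfect, 0 matches the observed mean.
    return float(1.0 - np.sum((obs - sim) ** 2) / np.sum((obs - obs.mean()) ** 2))

def kge(obs, sim):
    # Kling-Gupta Efficiency (2009 form): correlation, variability ratio, bias ratio.
    r = np.corrcoef(obs, sim)[0, 1]
    alpha = sim.std() / obs.std()
    beta = sim.mean() / obs.mean()
    return float(1.0 - np.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2))

def r_squared(obs, sim):
    # Squared Pearson correlation between observed and simulated values.
    return float(np.corrcoef(obs, sim)[0, 1] ** 2)

def loocv_predict(X, y, log_space=False):
    """Leave-one-out predictions from an ordinary least-squares fit.

    With log_space=True, predictors and response are log-transformed before
    fitting and predictions are back-transformed (requires strictly positive data).
    """
    n = len(y)
    A = np.column_stack([np.ones(n), np.log(X) if log_space else X])
    target = np.log(y) if log_space else y
    preds = np.empty(n)
    for i in range(n):
        train = np.arange(n) != i  # hold out basin i, fit on the rest
        coef, *_ = np.linalg.lstsq(A[train], target[train], rcond=None)
        fitted = A[i] @ coef
        preds[i] = np.exp(fitted) if log_space else fitted
    return preds

# Synthetic, strictly positive data (NOT from the study): a power-law relation
# between 7Q10, basin area (mi^2), and precipitation, with multiplicative noise.
rng = np.random.default_rng(0)
n = 60
area = rng.uniform(2, 1419, n)
precip = rng.uniform(35, 55, n)
q7_10 = 0.002 * area ** 1.1 * precip ** 0.8 * np.exp(rng.normal(0, 0.3, n))
X = np.column_stack([area, precip])

results = {}
for name, log_space in [("MLR", False), ("LTLR", True)]:
    sim = loocv_predict(X, q7_10, log_space)
    results[name] = {
        "R2": r_squared(q7_10, sim),
        "RMSE": rmse(q7_10, sim),
        "KGE": kge(q7_10, sim),
        "NSE": nse(q7_10, sim),
    }
    print(name, {k: round(v, 3) for k, v in results[name].items()})
```

The same hold-one-out loop could wrap any other estimator, such as a random forest, by replacing the least-squares fit with that model's fit and predict steps. Gages with a 7Q10 of zero would need separate handling before a log transform is applied.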